Figuring out “what’s going on?” is always something very important. For example, knowing what processes were running on which processors can be very useful if you are doing OS development and/or performances evaluation. If applied to virtualization, that turns into figuring out what VMs were running on which processors (or, better, what virtual CPUs were running on which physical CPUs), during the execution of some workload.

When using Xen as hypervisor, all that is possible by means of two tools: xentrace and xenalyze.

Xentrace

Xen has a number of trace points at key locations to allow developers to get a picture of what is going on inside of Xen. When these trace points are enabled, Xen will write the tracing information into per-cpu buffers within Xen. Then a program in dom0, called xentrace, which sets up and enables tracing, and periodically reads these buffers and writes them to disk. Xentrace is part of the xen.org tree and will come with every distribution of Xen. A very basic, but already quite useful invocation of the tool is the following:

# xentrace -D -e EVT_MASK > trace_file.bin &

run_your_workload

# killall xentrace

where EVT_MASK can be one of the following values:

0x0001f000 Â Â Â Â Â TRC_GEN0x0002f000 Â Â Â Â Â TRC_SCHED0x0004f000 Â Â Â Â Â TRC_DOM0OP0x0008f000 Â Â Â Â Â TRC_HVM0x0010f000 Â Â Â Â Â TRC_MEM0xfffff000 Â Â Â Â Â TRC_ALL

These are Event Classes. Using one of them tells xentrace to gather information about a group of events. For example 0x0002f000 can be used to obtain all the events related to vCPU scheduling. There are other options available, and it is also possible to achieve a finer grain control on the events (for complete list, refer to xentrace -? and/or man xentrace.)

Xenalyze

Unlike xentrace, xenalyze is an external tool, available from its own source code repository (see below). It has been publicly released in 2009 by George Dunlap, and the latest version is always available in this mercurial repository:Â http://xenbits.xen.org/gitweb/?p=xenalyze.git . Getting and installing xenalyze is really worthwhile. There also are slides and videos explaining what it is and what it does, so go check them out! 🙂

Reaching out to the code and getting it ready is really simply a matter of the following couple of commands:

$ hg clone http://xenbits.xensource.com/ext/xenalyze

$ cd xenalyze

$ make && sudo cp xenalyze /usr/local/bin

Done. From that point on, you can use xenalyze on a xentrace recorded binary trace to squeeze all the meaningful information hidden therein. In fact, those traces typically contain a lot of unordered records and thus, no matter whether you process them in binary or text format, it would be very hard to make any sense out of them. Xenalyze does exactly that, and to see how many different kind of analysis and reports it is able to produce, give it a try to xenalyze --usage!

For a short description of the various options, use xenalyze --help. (Of course, not all the options can be used on all traces, it depends on what events were enabled when the trace itself has been generated.) More useful information available by doing this (in the source directory):

$ firefox xenalyze.html

So, suppose you need an overview of the pCPUs utilization during the execution of a benchmark:

$ xenalyze --report-pcpu trace_file.bin

pcpu 0

running: Â 16240 73.28s 10829964 {239723648|239725972|872666178}

idle: Â 13965 176.03s 30252638 {239714420|962049840|1666942566}

pcpu 1

running: Â Â 4029 48.56s 28923656 {143170364|657235616|935623218}

idle: Â Â 3113 200.71s 154739351 {81727479|914900546|1438799076}

pcpu 2

running: Â Â 7320 86.64s 28407609 {545201172|1517387932|1466950626}

idle: Â Â 7121 162.63s 54811022 {120038136|2397666934|2398360236}

pcpu 3

running: Â Â 3987 59.77s 35977004 {407209880|597988052|598338384}

idle: Â Â 3484 189.52s 130552532 {597978696|598191824|598367142}

...

What? You also want a summary of what a happened to a particular domain, i.e., how much it run, where, and things like that? Here it comes, along with the time it spent in the various states and on the various pCPUs (and their percentiles!):

$ xenalyze --summary trace_file.bin

|-- Domain 24 --|

Runstates:

blocked: Â Â 1731 Â 3.64s 5044857 {11555862|276556494|686417912}

partial run: Â 18892 40.77s 5178744 {4586808|71684614|71951444}

full run: Â 31249 203.81s 15652886 { 60468|71694234|71830486}

partial contention:   1991  0.06s  71522 {  9948|635772|1109896}

concurrency_hazard: Â 18485 Â 0.89s 115022 {142444|280602|2692020}

full_contention: Â Â Â 26 Â 0.00s 129213 {407956|419344|419344}

Grant table ops:

Done by:

Done for:

-- v0 --

Runstates:

running: Â 23455 226.56s 23182581 {71947660|71948692|71956948}

runnable:  16406  0.68s  99306 {  9764|821916|858156}

wake:  14917  0.48s  76999 { 14914|150230|268290}

preempt: Â Â 1489 Â 0.20s 322779 {209428|1272116|2692020}

blocked: Â 14894 21.81s 3513866 {4573994|4583040|120036430}

offline:    23  0.00s  11669 {  9628| 14436| 22300}

lost: Â Â Â 1 Â 0.00s 700212 {700212|700212|700212}

cpu affinity: Â Â 130 4600620332 {7769902|1393431440|17955497276}

[0]: Â Â Â 39 1986387216 {19217192|1393431440|9576248402}

[1]: Â Â Â 42 6173329986 {28728080|1857893610|25743121114}

[2]: Â Â Â 14 2349742262 {6796684|1185646680|13186249258}

[3]: Â Â Â 15 7585020731 {1664152|710431216|26295455632}

[4]: Â Â Â 9 8233222749 {126628078|4843711610|25163611846}

[5]: Â Â Â 6 4760749294 {506508|835562790|17954634956}

[6]: Â Â Â 2 1430923246 {13989670|2847856822|2847856822}

[7]: Â Â Â 3 3044877543 {1219457918|2563417230|5351757482}

...

Last but not least, xenalyze can tell you the pCPU where each vCPU of each VM was running at any given time instant, as shown below. The vCPU is in the first column (format “vm v vcpu_of_the_vm“), time is in the middle and the pCPU is the last field:

$ xenalyze --scatterplot-pcpu trace_file.bin

0v11 0.018407919 11

0v0 0.018413500 0

0v1 0.024310861 1

0v2 0.024325698 2

0v11 0.024352327 11

0v11 0.024352327 11

0v3 0.024603944 3

0v3 0.024603944 3

24v1 0.029814047 7

0v2 0.030475882 2

0v2 0.030475882 2

0v3 0.030902385 3

0v3 0.030902385 3

0v1 0.049119711 1

0v1 0.049119711 1

23v1 0.085960228 8

23v1 0.086143025 8

23v1 0.086143025 8

23v0 0.086189784 13

0v11 0.118445979 11

0v11 0.118445979 11

0v3 0.138924786 3

0v3 0.138924786 3

0v0 0.139847059 0

...

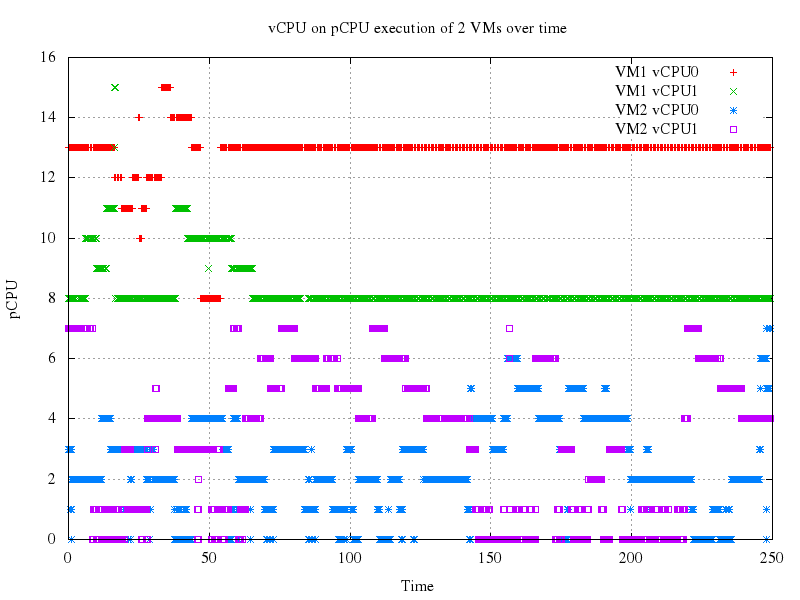

It is then easy to feed such output to gnuplot and produce a graph with time on the x axis, pCPUs on the y axis and where each set of points represents a vCPU. That makes it visually evident on what pCPUs the various vCPUs were running during the traced period.

In this (simple) case, for instance, we can see that VM1 is running only on pCPUs 8-15 and VM2 only on pCPUs 0-7.