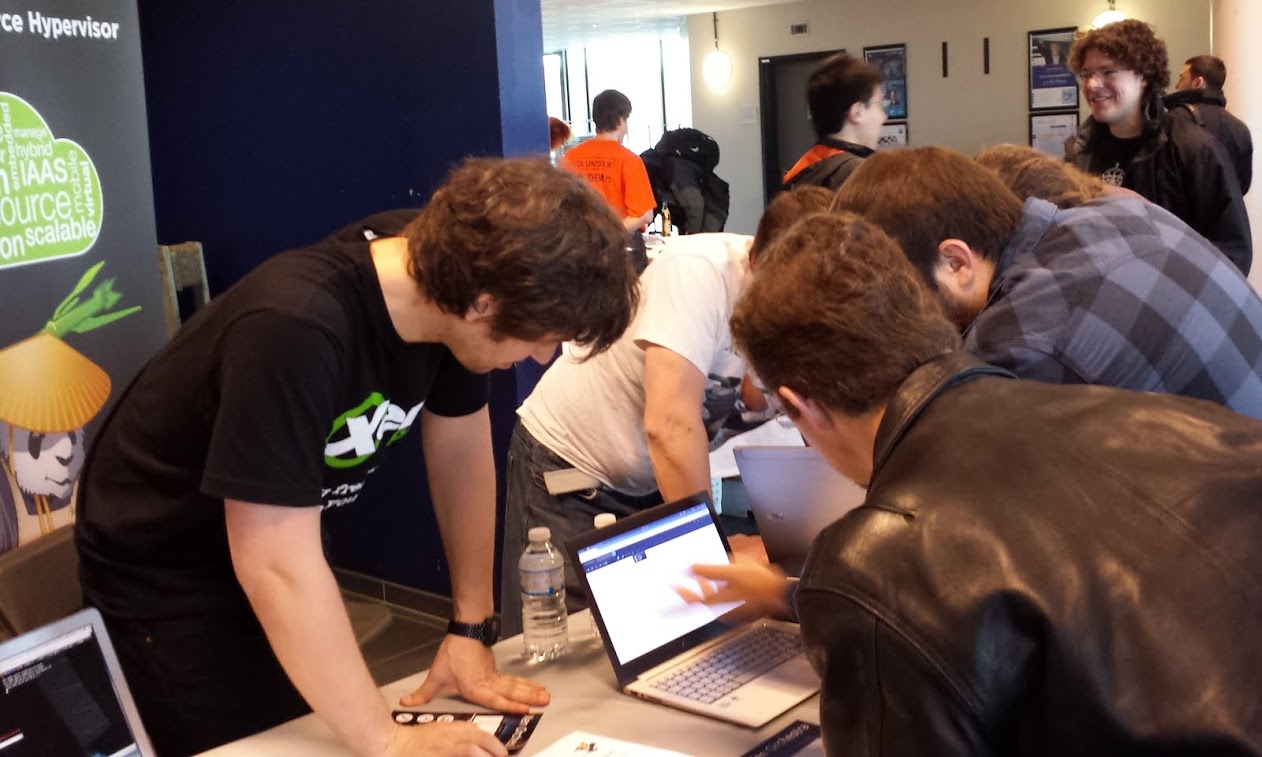

We were thrilled to host our Xen Project Developer and Design Summit in Nanjing Jiangning, China, this June. The event brought together our community and power users under one…

Power management in the Xen Project Hypervisor has historically targeted server applications to improve power consumption and heat management in data centers, reducing electricity and cooling costs. In the embedded space,…

Root Linux Conference is coming to Kyiv, Ukraine on April 14th. The conference is the biggest Linux and embedded conference in Eastern Europe with presenters exploring topics like: Linux in…

Registration and the call for proposals are open for the Xen Project Developer and Design Summit 2018, which will be held in Nanjing Jiangning, China from June 20 - 22,…

Xen on ARM is becoming more and more widespread in embedded environments. In these contexts, Xen is employed as a single solution to partition the system into multiple domains, fully…

As part of my Xen Around the World Project, I am posting a Google Map for everyone to add a Placemark and comment on where you are using Xen. I…
